I have been gradually improving my data wrangling tool, Easy Data Transform, putting out 70 public releases since 2019. While the product’s emphasis is on ease of use rather than pure performance, I have been trying to make it fast as well, so it can cope with the multi-million-row datasets customers like to throw at it. To see how I was doing, I ran a simple benchmark of the most recent version of Easy Data Transform (v1.37.0) against several other desktop data wrangling tools. The benchmark did a read, sort, join and write of a 1-million-row CSV file. I ran it on my Windows development PC and my Mac M1 laptop.
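To give a feel for what timing a read, sort, join and write looks like, here is a minimal sketch using only the Python standard library. It is not the script used for any of the tools in this comparison. The file layout (an `id` and a `value` column), the self-join on `id`, the helper names and the default row count are all assumptions made for illustration.

```python
import csv
import os
import random
import tempfile
import time


def make_csv(path, rows, seed=0):
    """Write a hypothetical test file: an integer id plus a random value."""
    rng = random.Random(seed)
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["id", "value"])
        for i in range(rows):
            writer.writerow([i, rng.randint(0, 1_000_000)])


def timed(label, timings, func, *args):
    """Run func(*args) and record the elapsed wall-clock seconds under label."""
    start = time.perf_counter()
    result = func(*args)
    timings[label] = time.perf_counter() - start
    return result


def read_rows(path):
    with open(path, newline="") as f:
        return list(csv.DictReader(f))


def sort_rows(rows):
    # Sort numerically by the "value" column.
    return sorted(rows, key=lambda r: int(r["value"]))


def join_rows(left, right):
    # Inner join on "id", using a dictionary built from the right-hand table.
    lookup = {r["id"]: r["value"] for r in right}
    return [
        {"id": l["id"], "value": l["value"], "value_right": lookup[l["id"]]}
        for l in left
        if l["id"] in lookup
    ]


def write_rows(path, rows):
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["id", "value", "value_right"])
        writer.writeheader()
        writer.writerows(rows)


def run_benchmark(n_rows=10_000):
    """Time each step on a generated file; return (timings, rows written)."""
    timings = {}
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "input.csv")
        dst = os.path.join(tmp, "output.csv")
        make_csv(src, n_rows)
        rows = timed("read", timings, read_rows, src)
        ordered = timed("sort", timings, sort_rows, rows)
        joined = timed("join", timings, join_rows, ordered, rows)
        timed("write", timings, write_rows, dst, joined)
        with open(dst, newline="") as f:
            written = sum(1 for _ in f) - 1  # exclude the header line
    return timings, written


if __name__ == "__main__":
    timings, written = run_benchmark()
    for step, seconds in timings.items():
        print(f"{step}: {seconds:.3f}s")
    print(f"rows written: {written}")
```

Timing each step separately, rather than the whole run, is what makes a per-task comparison possible. For numbers that mean anything, you would want to raise `n_rows` to 1,000,000 and repeat each run several times.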

Here is an overview of the results:

Time by task (seconds), on Windows without Power Query (smaller is better):

I have left Excel Power Query off this graph, as it is so slow you can hardly see the other bars when it is included!

Time by task (seconds), on Mac (smaller is better):

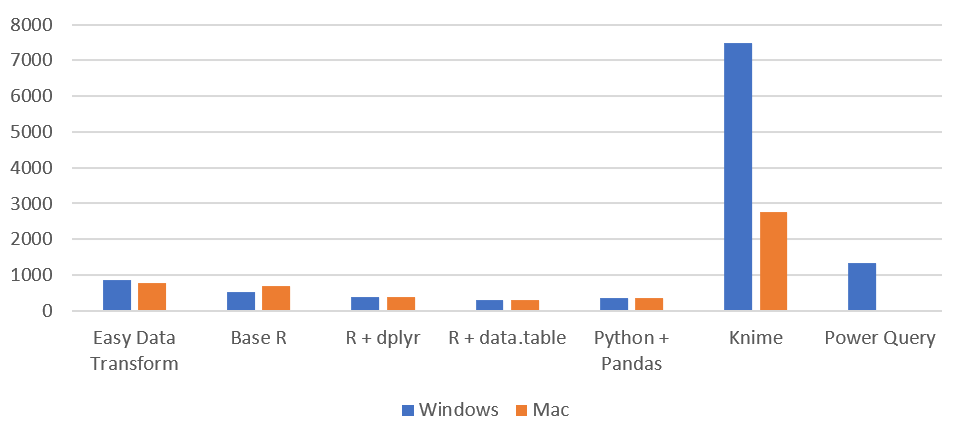

Memory usage (MB), Windows vs Mac (smaller is better):

So Easy Data Transform is nearly as fast as its nearest competitor, Knime, on Windows and a fair bit faster on an M1 Mac. It also uses a lot less memory than Knime. However, we have some way to go to catch up with the Pandas library for Python and the data.table package for R when it comes to raw performance. Hopefully I can get nearer to their performance in time. I was forbidden from including benchmarks for Tableau Prep and Alteryx by their licensing terms, which seems unnecessarily restrictive.

Looking at just the Easy Data Transform results, it is interesting to note that a newish MacBook Air M1 laptop is significantly faster than an AMD Ryzen 7 desktop PC from a few years ago.

See the full comparison:

Got some data to clean, merge, reshape or analyze? Why not download a free trial of Easy Data Transform? No sign up required.

There is a story that a president of a pet food company ate some of his own dog food, to show how good it was. I’m not sure how tasty dog food really needs to be, given that dogs are happy to lick their own backsides. But his commitment is admirable. The least we can do as software developers is to use our own software as much as possible. After all, if you don’t use it, how can you expect anyone else to?
